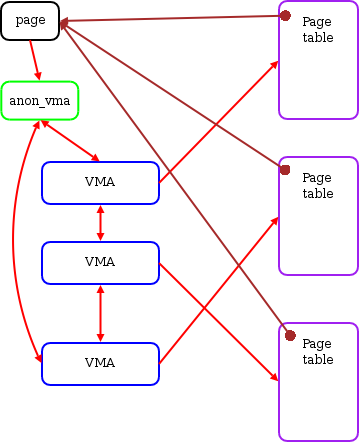

Each folio contains a `mapping` pointer that connects it back to where the folio belongs.

Here we only focus on folios that are in some way accessible to userspace: anonymous, file and shmem folios. Other folio types, including slab, page_pool and zone device folios, serve kernel memory allocation, the network stack and special device management respectively. They are specific to their subsystems' implementations and are of no interest to userspace. However, the first three types of folios are important enough to be reflected in the design of `struct folio`: only their layout is directly listed in `struct folio`. If you want to work with other types of folios, you have to fall back to the old-fashioned `union`-ed `struct page`.

This `mapping` pointer is necessary because it lets the kernel go from a folio back to its users.

For anonymous folios, we can find which tasks have them mapped.

For file folios, we can find which files (i.e. inodes) they belong to.

And shmem folios, just like file folios, can be used to find the files they belong to.

These similarities and differences are reflected in the structure that the `mapping` pointer points to.

For anonymous folios, it points to a `struct anon_vma`.

For file and shmem folios, it points to a `struct address_space`.
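How does the kernel tell which of the two structures `mapping` points to? It tags the pointer: for anonymous folios the lowest bit of `mapping` is set (the kernel has long named this flag `PAGE_MAPPING_ANON`). This is safe because both structures are at least word-aligned, so bit 0 of a real pointer is always zero. The following is a minimal sketch of that trick, assuming heavily simplified, hypothetical structs (`toy_folio`, `toy_anon_vma`, `toy_address_space`) rather than the real kernel definitions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, heavily simplified stand-ins for the kernel structures. */
struct toy_anon_vma { int id; };
struct toy_address_space { int id; };

struct toy_folio {
    /* Either a struct toy_address_space * (file/shmem folios) or a
     * struct toy_anon_vma * with bit 0 set (anonymous folios). */
    void *mapping;
};

/* Modeled on the kernel's PAGE_MAPPING_ANON: bit 0 of the mapping pointer. */
#define TOY_MAPPING_ANON ((uintptr_t)0x1)

static void set_anon_mapping(struct toy_folio *f, struct toy_anon_vma *av)
{
    /* Alignment guarantees bit 0 of a real pointer is zero. */
    assert(((uintptr_t)av & TOY_MAPPING_ANON) == 0);
    f->mapping = (void *)((uintptr_t)av | TOY_MAPPING_ANON);
}

static void set_file_mapping(struct toy_folio *f, struct toy_address_space *as)
{
    f->mapping = as;
}

static bool toy_folio_test_anon(const struct toy_folio *f)
{
    return ((uintptr_t)f->mapping & TOY_MAPPING_ANON) != 0;
}

/* Strip the tag bit to recover the anon_vma, or NULL for file folios. */
static struct toy_anon_vma *toy_folio_anon_vma(const struct toy_folio *f)
{
    if (!toy_folio_test_anon(f))
        return NULL;
    return (struct toy_anon_vma *)((uintptr_t)f->mapping & ~TOY_MAPPING_ANON);
}

/* Untagged pointer: the address_space of a file or shmem folio. */
static struct toy_address_space *toy_folio_file_mapping(const struct toy_folio *f)
{
    if (toy_folio_test_anon(f))
        return NULL;
    return f->mapping;
}
```

For example, after `set_anon_mapping(&f, &av)`, `toy_folio_test_anon(&f)` is true and `toy_folio_anon_vma(&f)` returns `&av`. Reusing an always-zero pointer bit avoids spending a separate field on the folio type.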

The owning file/inode can be identified by the `host` pointer inside `address_space`. File and shmem mappings can further be distinguished by the address space operations field, `a_ops`: shmem folios have `shmem_aops`, while file folios have their filesystem-specific `a_ops`, such as `btrfs_aops`, `ext4_aops`, `f2fs_dblock_aops`, etc.
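A minimal sketch of both lookups, assuming hypothetical stand-ins (`toy_inode`, `toy_aops`, `toy_address_space`, `toy_shmem_aops`, `toy_ext4_aops`). The shmem check compares the `a_ops` pointer by address, which is the same idea as the kernel's `shmem_mapping()` helper in `mm/shmem.c`; the real structures carry many more fields.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-ins for struct inode and friends. */
struct toy_inode { unsigned long i_ino; };

struct toy_aops { const char *name; };

struct toy_address_space {
    struct toy_inode *host;        /* the owning inode */
    const struct toy_aops *a_ops;  /* shmem vs. a real filesystem */
};

/* One operations table per "filesystem"; its identity is its address. */
static const struct toy_aops toy_shmem_aops = { "shmem" };
static const struct toy_aops toy_ext4_aops  = { "ext4" };

/* Same idea as the kernel's shmem_mapping(): compare a_ops by pointer. */
static bool toy_shmem_mapping(const struct toy_address_space *m)
{
    return m->a_ops == &toy_shmem_aops;
}

/* Identify the owning file through the host pointer. */
static unsigned long toy_owner_ino(const struct toy_address_space *m)
{
    assert(m->host != NULL);
    return m->host->i_ino;
}
```

Because each filesystem has exactly one operations table, pointer equality is enough; no string or flag comparison is needed.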

The address_space is pretty straightforward.

What's more interesting is anon_vma and how to locate all the tasks or pagetables that map the folio.

The problem: find all mapping pagetables from a given folio

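- Background: when swapping an anonymous folio out to disk, the kernel needs to modify every pagetable that has the folio mapped, replacing each of those entries with a swap entry so that no task keeps a mapping to memory that is being reclaimed.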

- Naive solution (`pte_chain`): just add another field recording a linked list of all pagetables mapping the folio. However, there are two problems:
  - Memory waste: 8 bytes for every 4 KiB of memory.
  - `fork()` performance: when forking a new child process, every folio in the parent process has to be modified to record the new child's pagetable.

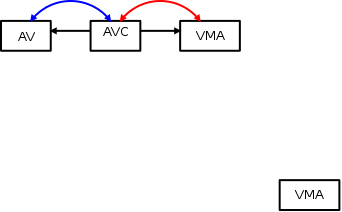
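To make the two costs concrete, here is a toy model of the `pte_chain` idea. It is not the historical kernel code; it assumes hypothetical names (`pc_folio`, `pte_chain`, `toy_pte_t`, `rmap_add`, `toy_fork`, `unmap_all`) and one chain node per mapping PTE. Swap-out becomes a simple chain walk, but `toy_fork()` has to touch every folio, and each folio carries an extra head pointer: 8 bytes per 4096-byte folio is 1/512 of memory, about 0.2%, before counting the chain nodes themselves.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical model: each folio keeps a list of every PTE that maps it. */
typedef unsigned long toy_pte_t;

struct pte_chain {
    toy_pte_t *ptep;          /* a page-table entry mapping the folio */
    struct pte_chain *next;
};

struct pc_folio {
    struct pte_chain *chain;  /* the extra per-folio field: 8 bytes on 64-bit */
};

static void rmap_add(struct pc_folio *f, toy_pte_t *ptep)
{
    struct pte_chain *pc = malloc(sizeof(*pc));
    assert(pc != NULL);
    pc->ptep = ptep;
    pc->next = f->chain;
    f->chain = pc;
}

/* fork(): child_ptes[i] maps the same folio as the parent's i-th PTE,
 * so every one of the nr folios gains a chain node. Returns folios touched. */
static size_t toy_fork(struct pc_folio *folios, toy_pte_t *child_ptes, size_t nr)
{
    for (size_t i = 0; i < nr; i++)
        rmap_add(&folios[i], &child_ptes[i]);
    return nr;
}

/* Swap-out: walk the chain and clear every PTE that maps the folio. */
static size_t unmap_all(struct pc_folio *f)
{
    size_t n = 0;
    for (struct pte_chain *pc = f->chain; pc; pc = pc->next) {
        *pc->ptep = 0;
        n++;
    }
    return n;
}

static void rmap_free(struct pc_folio *f)
{
    while (f->chain) {
        struct pte_chain *next = f->chain->next;
        free(f->chain);
        f->chain = next;
    }
}

/* Head-pointer overhead in parts per million: 8 / 4096 = 1/512. */
static unsigned long head_overhead_ppm(void)
{
    return 8UL * 1000000UL / 4096UL;
}
```

The walk in `unmap_all()` is exactly what swap-out wants, which is why the idea is attractive; the price is paid on every `fork()` and in every folio.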

- Almost there (naive object-based reverse mapping): add an indirect layer.
  For file folios, each folio is owned by a file (hence "object-based" reverse mapping), so we can inspect the file via the `mapping` pointer. For anonymous folios, each folio is owned by a VMA. We can make the `mapping` pointer point to an indirect layer called the `anon_vma` structure, which has a linked list of the VMAs that contain the folio. However, there is still a subtle problem:
  - "Phantom" VMA: when the child process writes to a folio it previously shared with its parent through CoW, it allocates a new folio. However, the child's VMA is still linked in the old folio's `anon_vma`, so the VMA list can end up containing unrelated VMAs.


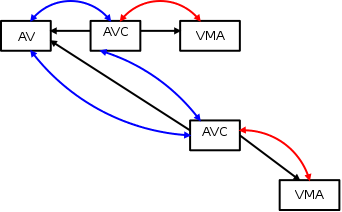
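The phantom-VMA problem can be reproduced in a small model of the naive scheme. This is a sketch under stated assumptions: all names (`toy_vma`, `toy_anon_vma`, `toy_folio`, `anon_vma_link`, `toy_fork`, `toy_cow`, `rmap_walk`) are hypothetical, each VMA maps a single page, and the `anon_vma` keeps a plain list of VMAs. After the child's CoW write, both the old and the new folio still point to the same `anon_vma`, so a reverse-mapping walk of either one visits two VMAs but finds only one real mapping.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of the naive scheme: one anon_vma shared by parent
 * and child, holding a plain list of VMAs. Each VMA maps a single page. */
struct toy_folio;

struct toy_vma {
    struct toy_folio *page;      /* what this VMA's single PTE maps */
    struct toy_vma *next;        /* link in the anon_vma's VMA list */
};

struct toy_anon_vma {
    struct toy_vma *head;        /* every VMA that *might* map a folio */
};

struct toy_folio {
    struct toy_anon_vma *anon_vma;  /* stands in for folio->mapping */
};

static void anon_vma_link(struct toy_anon_vma *av, struct toy_vma *vma)
{
    vma->next = av->head;
    av->head = vma;
}

/* fork(): the child VMA shares the parent's folio and joins the same list. */
static void toy_fork(struct toy_anon_vma *av, struct toy_vma *parent,
                     struct toy_vma *child)
{
    child->page = parent->page;
    anon_vma_link(av, child);
}

/* CoW write in the child: the new folio can only point at the shared
 * anon_vma, and the child's VMA stays on that same list. */
static void toy_cow(struct toy_vma *child, struct toy_folio *new_folio)
{
    new_folio->anon_vma = child->page->anon_vma;
    child->page = new_folio;
}

/* Reverse-map walk: visit every listed VMA and count the real mappings. */
static void rmap_walk(const struct toy_folio *f, size_t *visited, size_t *hits)
{
    *visited = 0;
    *hits = 0;
    for (const struct toy_vma *v = f->anon_vma->head; v; v = v->next) {
        (*visited)++;
        if (v->page == f)
            (*hits)++;
    }
}
```

With one parent and one child, each walk visits one phantom VMA; with many forked children sharing one `anon_vma`, every walk has to scan all of their VMAs.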

- Current solution (object-based reverse mapping): add another indirect layer.
  Now, AV0 (`anon_vma`) has a linked list of AVC0 (`anon_vma_chain`), and AVC0 points to VMA0. When forking, a new VMA1 is created, and its associated AVC1 is also created and linked to AV0, so folios still shared with the parent can reach the child's VMA. The child also gets its own AV1, connected to VMA1 through a new AVC2. When the child writes to a CoW folio, the newly allocated folio is attached to AV1 instead of AV0, so a reverse-mapping walk of the new folio only visits the child's VMA. AVC1 is kept, because other folios in VMA1 may still be shared with the parent.
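A toy model of the chained scheme, assuming hypothetical names (`toy_avc`, `toy_anon_vma`, `toy_vma`, `toy_folio`, `avc_link`, `toy_fork`, `toy_cow`, `rmap_walk`, `av_free`) and using a singly linked list where the kernel keeps AVCs in an interval tree. `toy_fork()` creates AVC1 (child to AV0) and AVC2 (child to its own AV1); a CoW write then attaches the new folio to AV1. In this sketch AVC1 is left in place after the write, because other folios of the child's VMA may still be shared with the parent, so the old folio's walk still visits two VMAs while the new folio's walk visits only one.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical model of anon_vma_chain. The kernel keeps AVCs in an
 * interval tree per anon_vma; a singly linked list stands in here. */
struct toy_folio;
struct toy_vma;

struct toy_avc {                 /* one edge between an anon_vma and a VMA */
    struct toy_vma *vma;
    struct toy_avc *next_in_av;  /* next AVC on the same anon_vma */
};

struct toy_anon_vma {
    struct toy_avc *chain;       /* VMAs that may map this AV's folios */
};

struct toy_vma {
    struct toy_anon_vma *anon_vma;  /* where this VMA's *new* folios go */
    struct toy_folio *page;         /* single-page VMA for simplicity */
};

struct toy_folio {
    struct toy_anon_vma *anon_vma;  /* stands in for folio->mapping */
};

static void avc_link(struct toy_anon_vma *av, struct toy_vma *vma)
{
    struct toy_avc *avc = malloc(sizeof(*avc));
    assert(avc != NULL);
    avc->vma = vma;
    avc->next_in_av = av->chain;
    av->chain = avc;
}

/* fork(): link the child to the parent's AV (AVC1) so still-shared folios
 * can find it, then give the child its own AV (AV1, via AVC2). */
static void toy_fork(struct toy_vma *parent, struct toy_vma *child,
                     struct toy_anon_vma *child_av)
{
    child->page = parent->page;
    avc_link(parent->anon_vma, child);
    child_av->chain = NULL;
    avc_link(child_av, child);
    child->anon_vma = child_av;
}

/* CoW write in the child: the new folio belongs to the child's own AV. */
static void toy_cow(struct toy_vma *child, struct toy_folio *new_folio)
{
    new_folio->anon_vma = child->anon_vma;
    child->page = new_folio;
}

/* Reverse-map walk: returns VMAs visited, stores real mappings in *hits. */
static size_t rmap_walk(const struct toy_folio *f, size_t *hits)
{
    size_t visited = 0;
    *hits = 0;
    for (const struct toy_avc *a = f->anon_vma->chain; a; a = a->next_in_av) {
        visited++;
        if (a->vma->page == f)
            (*hits)++;
    }
    return visited;
}

static void av_free(struct toy_anon_vma *av)
{
    while (av->chain) {
        struct toy_avc *next = av->chain->next_in_av;
        free(av->chain);
        av->chain = next;
    }
}
```

The extra indirection is what limits a new folio's walk to the VMAs that could actually map it, at the price of one AVC allocation per (VMA, `anon_vma`) pair on every fork.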

In my opinion, the third solution is excessive; even Linus complained about it. Considering clarity and simplicity, the second would be the best.